Agent Optimization: Context Engineering for High-Accuracy Agentic Automation

Agent Optimization explores context engineering strategies in GitHub Copilot, demonstrating how structured context design improves reliability, stabilizes agentic patterns, and enables high-accuracy agentic automation development.

TECHNICAL

Kiran Kumar Edupuganti

2/18/2026 | 4 min read

GitHub Copilot | Experience-Driven Insights

The reWireAdaptive portfolio, in association with the @reWireByAutomation channel, presents an article on agent design. Titled "Agent Optimization: Context Engineering for High-Accuracy Agentic Automation", it explores how context engineering can be applied to optimize agents for high-accuracy outcomes.

Introduction — Engineering Context for High-Accuracy Outcomes

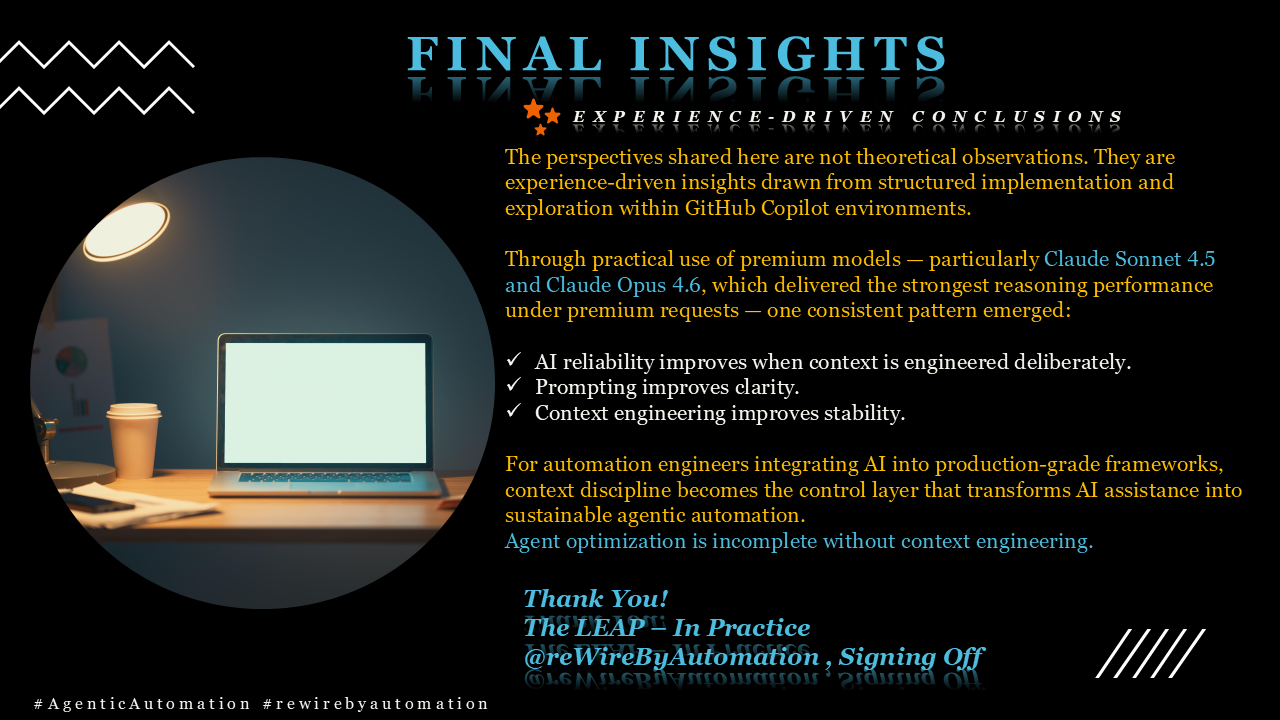

As AI-enabled development becomes embedded in modern IDE workflows, much of the discussion still revolves around prompt engineering — how to ask better questions to get better answers. However, through structured implementation and continuous exploration using GitHub Copilot in real automation projects, a deeper realization emerged:

High-accuracy AI output is not driven by prompting alone — it is driven by engineered context.

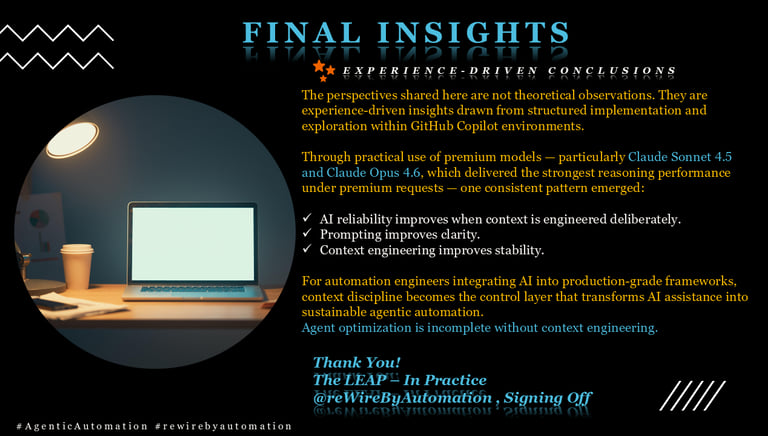

The insights shared in this article are experience-driven, drawn directly from hands-on implementation and structured exploration while working with GitHub Copilot in automation environments. Through iterative experimentation — particularly with premium models such as Claude Sonnet 4.5 and Claude Opus 4.6, which consistently delivered the strongest reasoning depth and architectural alignment — a clear pattern emerged:

Context discipline determines output reliability.

This article explains how context engineering influences automation stability, how it strengthens agentic automation patterns, and how it can be implemented deliberately in GitHub Copilot environments integrated with IDEs such as VS Code, IntelliJ IDEA, and PyCharm.

What Context Engineering Really Means in Automation Development

Context engineering is the deliberate shaping of the environment within which an AI model operates. Unlike deterministic programs, AI systems interpret surrounding signals before generating output.

In GitHub Copilot environments, context includes:

The active file

Adjacent files in the project

Repository structure

Logging style

Naming conventions

Previously generated code

Session memory

Instruction files

In practical automation projects built on tools such as RestAssured and Playwright, uncontrolled context gradually introduces architectural drift. Without guardrails, Copilot may:

Introduce new patterns unnecessarily

Suggest alternate assertion styles

Create utilities that diverge from established conventions

Change logging formats subtly

Recommend additional dependencies not aligned with the project design

Through implementation, it became evident that AI models do not fail randomly. They adapt to the signals they observe. If signals are inconsistent, output becomes inconsistent.

Context engineering is the mechanism that stabilizes those signals.

Why Context Engineering Matters to the Test Automation Community

Test automation forums often focus on AI-generated scripts, productivity gains, and prompt templates. These discussions are important but incomplete.

In mature automation ecosystems, the critical question is not whether AI can generate code — it is whether AI can generate code without destabilizing the framework.

From implementation experience, the difference between productive AI integration and long-term technical debt lies in context discipline.

For automation engineers and teams:

It protects framework integrity.

It reduces flakiness introduced by inconsistent patterns.

It preserves maintainability.

It enables AI participation without structural damage.

This makes context engineering highly relevant to practitioners seeking sustainable AI integration rather than short-term experimentation.

How Context Engineering Strengthens Agentic Automation

Agentic automation implies that AI participates continuously in development — not just as a one-off assistant, but as a recurring collaborator.

However, agentic behavior depends on reinforcement of stable patterns.

When context is inconsistent:

The agent’s reasoning shifts between sessions.

Instruction adherence weakens.

Retry cycles increase.

Premium requests are consumed inefficiently.

During structured experimentation with Claude Sonnet 4.5 and Claude Opus 4.6 under premium requests, it became clear that even highly capable models perform best when context is structured and consistent.

Premium models amplify reasoning depth — but they still rely on contextual signals.

Strong context engineering leads to:

Stable agentic pattern reinforcement

Reduced retry loops

Faster convergence to correct solutions

Higher trust in AI outputs

Agent optimization, therefore, requires context stability as a foundational layer.

Practical Implementation in GitHub Copilot

The following methods were implemented and validated in real Copilot-driven workflows.

1. Repository-Level Instruction Anchoring

Create a persistent instruction file, such as:

.github/copilot-instructions.md

Use it to define:

Framework rules

Assertion conventions

Logging standards

Dependency constraints

Naming rules

Retry policies

This significantly reduced output drift across sessions.

Premium requests delivered higher accuracy when these structural anchors existed.
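As a rough illustration, the sketch below checks that an instruction file covers the six areas listed above. The heading names, the sample file content, and the `missing_sections` helper are assumptions made for this example; GitHub Copilot does not require any particular headings in `.github/copilot-instructions.md`.

```python
import re

# Hypothetical section headings, one per area listed above.
REQUIRED_SECTIONS = [
    "Framework rules",
    "Assertion conventions",
    "Logging standards",
    "Dependency constraints",
    "Naming rules",
    "Retry policies",
]

# Illustrative content only; real rules depend on the project.
SAMPLE_INSTRUCTIONS = """\
## Framework rules
- Build requests through the existing RequestSpecification builder.

## Assertion conventions
- Use one assertion style across all tests.

## Logging standards
- Keep the current log format unchanged.

## Dependency constraints
- Do not add new dependencies without approval.

## Naming rules
- Test classes end with `Test`.
"""


def missing_sections(markdown_text, required=REQUIRED_SECTIONS):
    """Return the required sections that have no matching markdown heading."""
    headings = {
        match.group(1).strip().lower()
        for match in re.finditer(r"^#{1,6}\s+(.+?)\s*$", markdown_text, re.MULTILINE)
    }
    return [name for name in required if name.lower() not in headings]


if __name__ == "__main__":
    # In a real repository, read .github/copilot-instructions.md instead.
    print(missing_sections(SAMPLE_INSTRUCTIONS))
```

Run against the sample text, the check reports that the retry policy section is missing. A check like this could run in CI so the anchor file does not silently lose a section.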

2. Scope-Constrained Prompts

Broad prompts generate broad interpretations.

Instead of requesting large-scale changes, constraining scope yielded better results:

Refactor this class only.

Follow the existing RequestSpecification builder pattern.

Do not introduce new dependencies.

Preserve current logging format.

This dramatically reduced architectural deviation and premium retry cycles.
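One way to make this habit repeatable is to assemble each prompt from a single narrow task plus an explicit list of constraints. The sketch below is only an illustration: `ScopedPrompt` and its `render` method are hypothetical names, and the output is plain text to paste into Copilot Chat, not a call to any Copilot API.

```python
from dataclasses import dataclass, field


@dataclass
class ScopedPrompt:
    """A prompt made of one narrow task plus explicit constraints."""

    task: str
    constraints: list = field(default_factory=list)

    def render(self):
        # Put the task first, then each constraint on its own line.
        lines = [self.task.strip()]
        lines.extend(f"- {rule.strip()}" for rule in self.constraints)
        return "\n".join(lines)


# The task and constraints are the four example lines from this section.
prompt = ScopedPrompt(
    task="Refactor this class only.",
    constraints=[
        "Follow the existing RequestSpecification builder pattern.",
        "Do not introduce new dependencies.",
        "Preserve current logging format.",
    ],
)

if __name__ == "__main__":
    print(prompt.render())
```

Keeping the constraints in a list makes it easy to reuse the same guardrails across prompts while changing only the task.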

3. Session Reset Discipline

Session accumulation introduces noise.

Through experimentation, resetting sessions during topic shifts and re-establishing constraints improved reasoning consistency — particularly when using premium models.

Context reset prevents cross-topic contamination.

4. Context Minimization for Standard Models

When premium requests were exhausted and standard models became necessary, context engineering became even more critical.

Standard models perform poorly under:

Large multi-file context

Long instruction chains

Complex architectural reasoning

Breaking tasks into smaller, isolated objectives significantly improved reliability.

This allowed continued productivity even under premium caps.
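Breaking work into smaller objectives can be pictured as batching files so that each request stays under a fixed size. The sketch below assumes a simple character budget; the budget value, file names, and sizes are invented for illustration, and Copilot exposes no such setting.

```python
def split_into_batches(file_sizes, max_chars):
    """Group files into batches whose total size stays within max_chars.

    file_sizes maps a file name to its size in characters. A file larger
    than max_chars gets a batch of its own.
    """
    batches = []
    current, current_size = [], 0
    for name, size in file_sizes.items():
        # Start a new batch when adding this file would exceed the budget.
        if current and current_size + size > max_chars:
            batches.append(current)
            current, current_size = [], 0
        current.append(name)
        current_size += size
    if current:
        batches.append(current)
    return batches


# Hypothetical files and sizes, for illustration only.
files = {
    "LoginTest.java": 3000,
    "CartTest.java": 2500,
    "ApiClient.java": 6000,
    "Assertions.java": 1500,
}

if __name__ == "__main__":
    for batch in split_into_batches(files, max_chars=6000):
        print(batch)
```

Each batch then becomes one isolated objective, handled in its own request with its own restated constraints.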

5. Pattern Reinforcement Strategy

Once a solution is aligned correctly with the framework design:

Reuse it.

Reinforce it.

Avoid allowing unnecessary variation.

Over time, Copilot began aligning more consistently with established patterns.

This is agentic reinforcement in practice.

Warning Signs of Weak Context Engineering

During experimentation, the following signals consistently indicated context instability:

Changing assertion styles

Random utility class generation

Inconsistent locator strategies

Logging format shifts

Dependency suggestions outside established patterns

Increased premium retries

These are not model weaknesses alone. They are context signals misaligned with framework expectations.
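Some of these signals can be spotted mechanically. The sketch below counts assertion styles in a piece of Java test source and flags a mix of styles. The regular expressions are rough heuristics chosen for this example, not a complete parser, and the sample test lines are invented.

```python
import re
from collections import Counter

# Hypothetical, simplified patterns for three Java assertion styles.
ASSERTION_STYLES = {
    "junit": re.compile(r"\bassert(?:Equals|True|False)\("),
    "assertj": re.compile(r"\bassertThat\(.+\)\.\w+\("),
    "hamcrest": re.compile(r"\bassertThat\(.+,\s*\w+\("),
}

# Invented sample lines mixing two styles.
SAMPLE_TEST = """\
assertThat(response.statusCode()).isEqualTo(200);
assertThat(body.getId()).isNotNull();
assertEquals("OK", body.getStatus());
"""


def assertion_style_counts(source):
    """Count how many lines use each assertion style."""
    counts = Counter()
    for line in source.splitlines():
        for style, pattern in ASSERTION_STYLES.items():
            if pattern.search(line):
                counts[style] += 1
    return counts


def has_style_drift(source):
    """Return True when more than one assertion style appears."""
    return len(assertion_style_counts(source)) > 1


if __name__ == "__main__":
    print(dict(assertion_style_counts(SAMPLE_TEST)))
    print(has_style_drift(SAMPLE_TEST))
```

A similar count could be kept for logging calls or locator strategies, so drift shows up as a number in review rather than as a surprise later.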

Stay tuned for the next article from the reWireAdaptive portfolio.

This is @reWireByAutomation (Kiran Edupuganti), signing off!

With this, @reWireByAutomation has published "Agent Optimization: Context Engineering for High-Accuracy Agentic Automation".